
Core Knowledge and Modularity

the question

‘there are many separable systems of mental representations ... and thus many different kinds of knowledge. ... the task ... is to contribute to the enterprise of finding the distinct systems of mental representation and to understand their development and integration’

(Hood et al 2000, p. 1522)

core knowledge

‘Just as humans are endowed with multiple, specialized perceptual systems, so we are endowed with multiple systems for representing and reasoning about entities of different kinds.’

(Carey and Spelke 1996: 517)

‘core systems are largely innate, encapsulated, and unchanging, arising from phylogenetically old systems built upon the output of innate perceptual analyzers.’

(Carey and Spelke 1996: 520)

representational format: iconic (Carey 2009)

knowledge vs core knowledge

‘there is a paucity of … data to suggest that they are the only or the best way of carving up the processing, and it seems doubtful that the often long lists of correlated attributes should come as a package’

Adolphs (2010, p. 759)

‘we wonder whether the dichotomous characteristics used to define the two-system models are … perfectly correlated …

[and] whether a hybrid system that combines characteristics from both systems could not be … viable’

Keren and Schul (2009, p. 537)

‘the process architecture of social cognition is still very much in need of a detailed theory’

Adolphs (2010, p. 759)

We have core knowledge of the principles of object perception.

two problems

- How does this explain the looking/searching discrepancy?

- Can appeal to core knowledge explain anything?

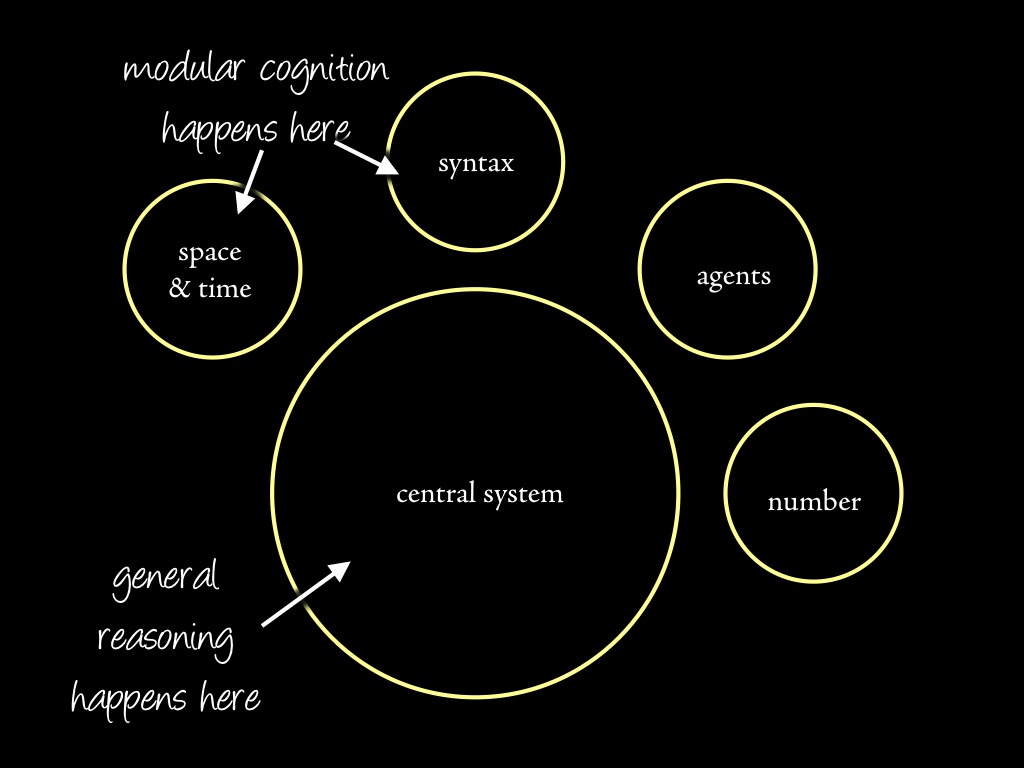

core system = module?

‘In Fodor’s (1983) terms, visual tracking and preferential looking each may depend on modular mechanisms.’

Spelke et al (1995, p. 137)

- domain specificity

modules deal with 'eccentric' bodies of knowledge

- limited accessibility

representations in modules are not usually inferentially integrated with knowledge

- information encapsulation

modules are unaffected by general knowledge or representations in other modules

For something to be informationally encapsulated is for its operation to be unaffected by the mere existence of general knowledge or representations stored in other modules (Fodor 1998b: 127)

- innateness

roughly, the information and operations of a module are not straightforwardly consequences of learning

core knowledge = modularity

We have core knowledge (= modular representations) of the principles of object perception.

two problems

- How does this explain the looking/searching discrepancy?

- Can appeal to core knowledge (or modularity) explain anything?

