Computation is the Real Essence of Core Knowledge

the question

indirect approach

‘modern philosophers … have no theory of thought to speak of. I do think this is appalling; how can you seriously hope for a good account of belief if you have no account of belief fixation?’

(Fodor 1987: 147)

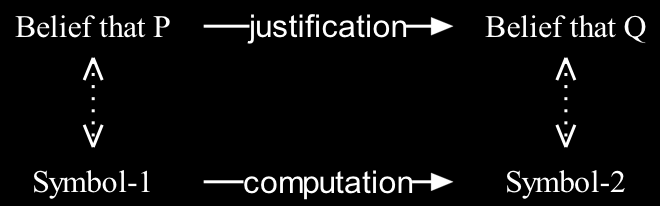

‘Thinking is computation’

(Fodor 1998: 9)

three points of comparison

- performance (patterns of success and failure)

- hardware

- program (symbols and operations vs. knowledge states and inferences)

thinking isn’t computation … Fodor’s own argument

1. Computational processes are not sensitive to context-dependent relations among representations.

2. Thinking sometimes involves being sensitive to context-dependent relations among representations as such.

(e.g. the relation … is adequate evidence for me to accept that … )

3. Therefore, thinking isn’t computation.

‘the Computational Theory is probably true at most of only the mind’s modular parts. … a cognitive science that provides some insight into the part of the mind that isn’t modular may well have to be different, root and branch’

(Fodor 2000: 99)

If a process is not sensitive to context-dependent relations, it will exhibit:

- information encapsulation;

- limited accessibility; and

- domain specificity.

(Butterfill 2007)

computation is the real essence of core knowledge (/modularity)

‘there is a paucity of … data to suggest that they are the only or the best way of carving up the processing, and it seems doubtful that the often long lists of correlated attributes should come as a package’

(Adolphs 2010, p. 759)

‘we wonder whether the dichotomous characteristics used to define the two-system models are … perfectly correlated … [and] whether a hybrid system that combines characteristics from both systems could not be … viable’

(Keren and Schul 2009, p. 537)

‘the process architecture of social cognition is still very much in need of a detailed theory’

(Adolphs 2010, p. 759)

Fodor

Q: What is thinking?

A: Computation

Awkward Problem: Fodor’s Computational Theory only works for modules

Fodor

Q: What is modularity?

A: Computation

Useful Consequence: Fodor’s Computational Theory describes a process like thinking

core knowledge = modularity

We have core knowledge (= modular representations) of the principles of object perception.

two problems

- How does this explain the looking/searching discrepancy?

- Can appeal to core knowledge (/ modularity) explain anything?

questions

1. How do humans come to meet the three requirements on knowledge of objects?

2a. Given that the simple view is wrong, what is the relation between the principles of object perception and infants’ competence in segmenting objects, object permanence and tracking causal interactions?

2b. The principles of object perception result in ‘expectations’ in infants. What is the nature of these expectations?

3. What is the relation between adults’ and infants’ abilities concerning physical objects and their causal interactions?